Key Components of an Enterprise Data Platform:

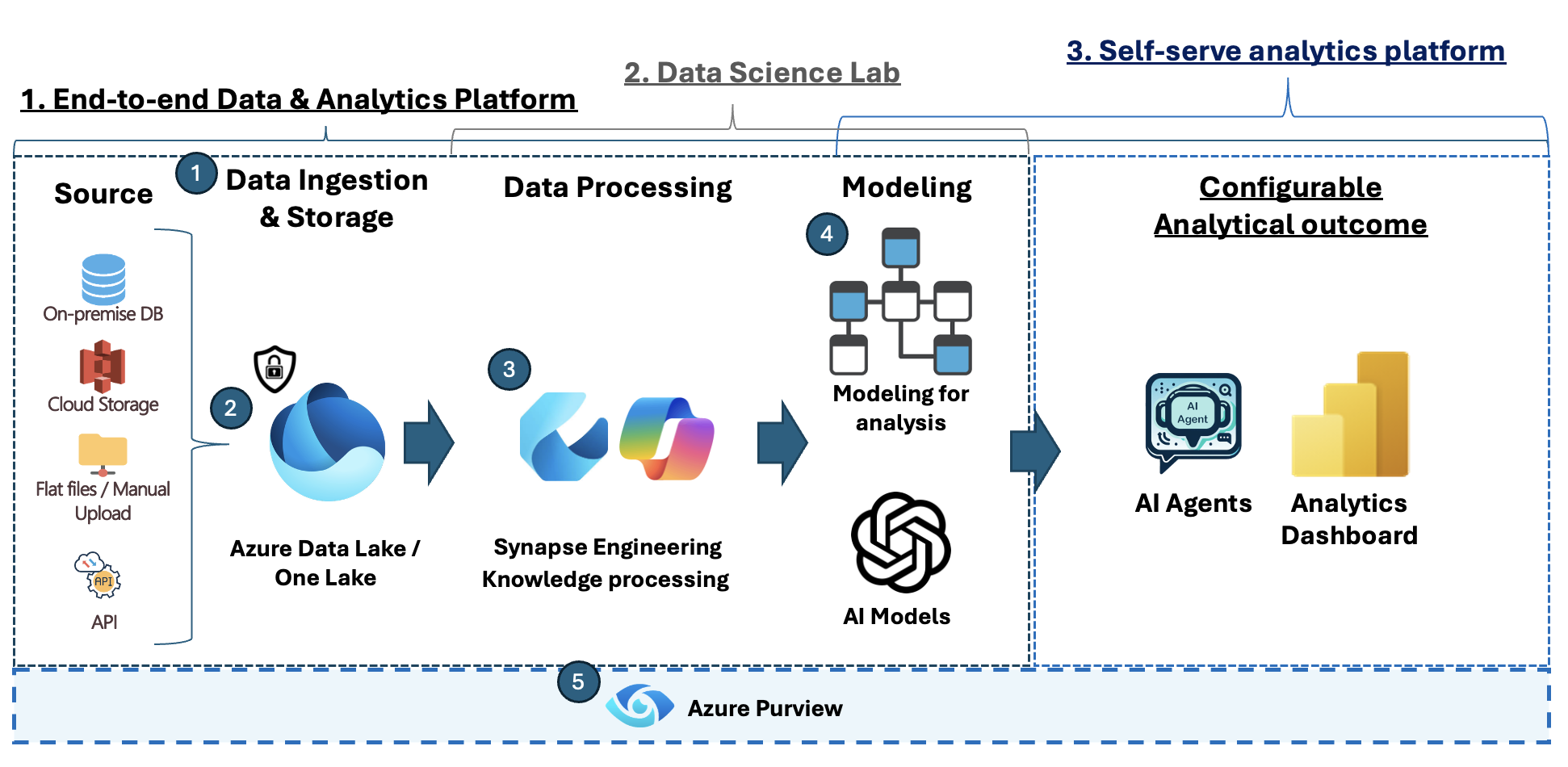

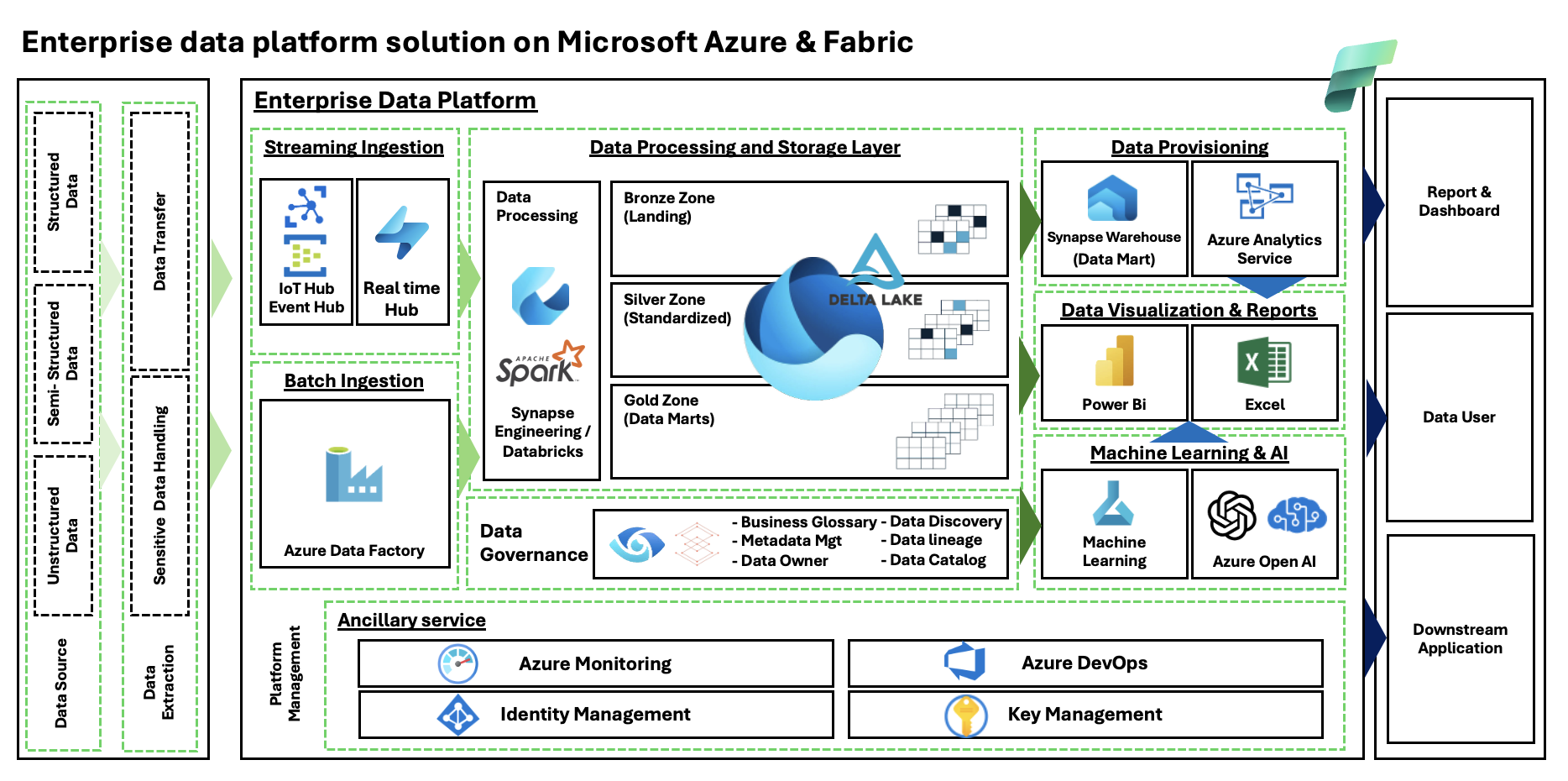

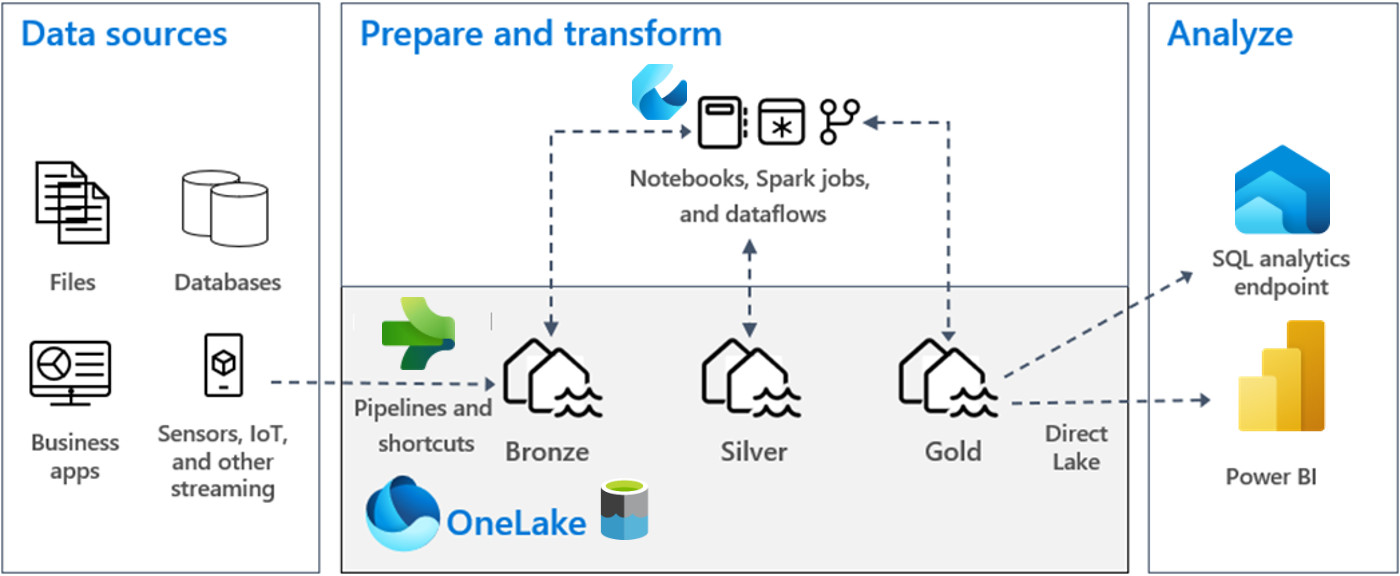

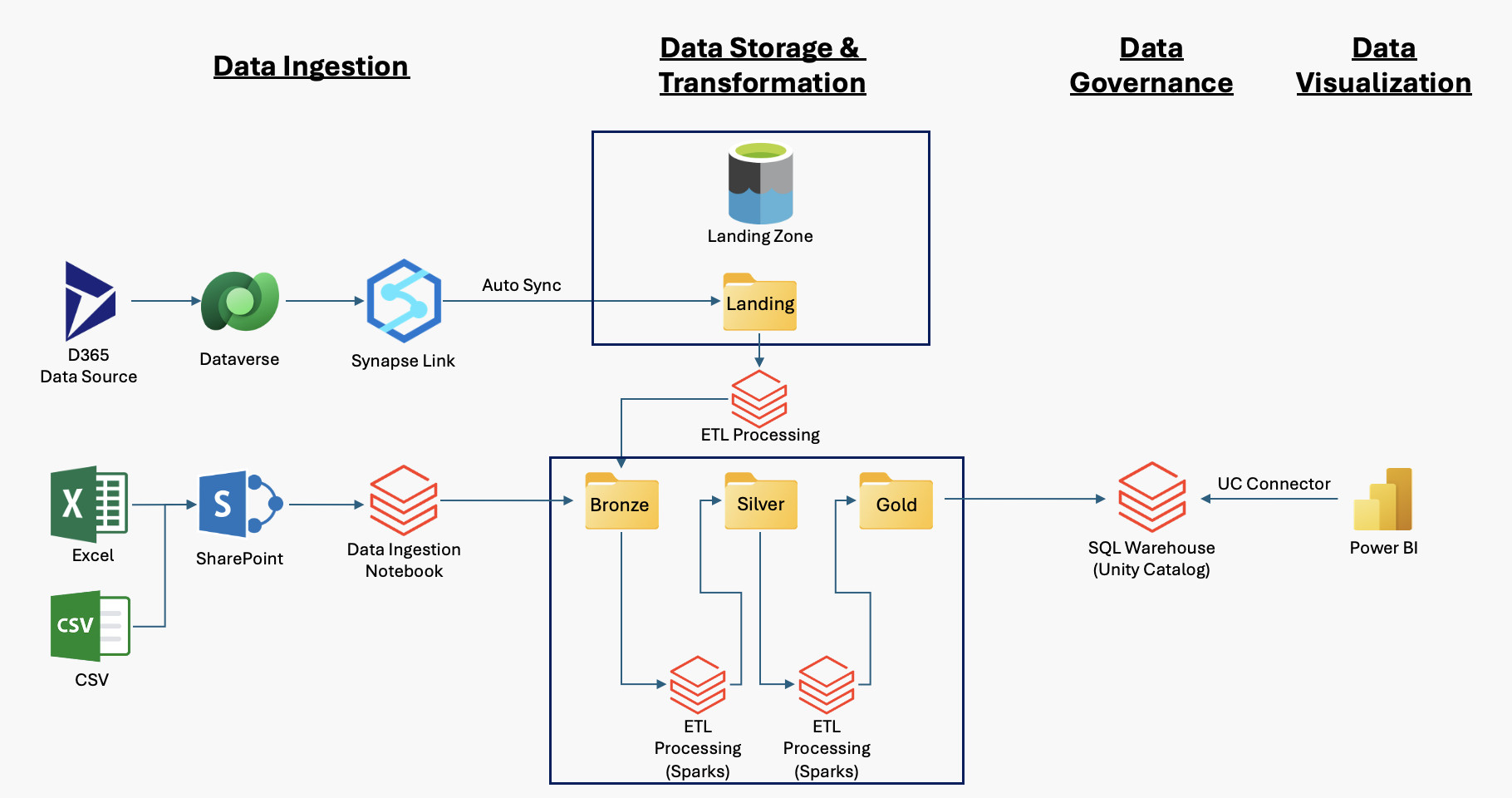

- Data Ingestion: Mechanisms to collect and import data from various sources (e.g., databases, APIs, IoT devices).

- Data Storage: Scalable storage solutions, such as data lakes (raw structured, semi-structured, and unstructured data) and data warehouses (curated, mostly structured data).

- Data Processing: Tools and frameworks for cleaning, transforming, and enriching data, for example through ETL (Extract, Transform, Load) pipelines.

- Data Analytics: Capabilities for analyzing data, including business intelligence tools and machine learning frameworks.

- Data Governance: Policies and practices to manage data quality, security, and compliance.
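To make the division of responsibilities concrete, here is a minimal, self-contained Python sketch of the five components wired into a single flow. It is an illustration under stated assumptions, not a reference implementation: the CSV string stands in for a real source, the in-memory `Storage` class stands in for a data lake and a warehouse, and every name (`ingest`, `Storage`, `passes_quality_rules`, `transform`, `revenue_by_region`) is hypothetical.

```python
import csv
import io
from dataclasses import dataclass, field
from typing import Any

# Hypothetical source: a CSV export standing in for a database, API, or IoT feed.
RAW_CSV = """order_id,region,amount
1,EU,120.50
2,US,80.00
3,EU,
4,US,200.25
"""


def ingest(raw: str) -> list[dict[str, str]]:
    """Data Ingestion: pull records from a source as-is."""
    return list(csv.DictReader(io.StringIO(raw)))


@dataclass
class Storage:
    """Data Storage: in-memory stand-ins for a data lake (raw) and a warehouse (curated)."""
    lake: list[dict[str, str]] = field(default_factory=list)
    warehouse: list[dict[str, Any]] = field(default_factory=list)


def passes_quality_rules(row: dict[str, str]) -> bool:
    """Data Governance: one example quality rule; every record needs an amount."""
    return (row.get("amount") or "").strip() != ""


def transform(rows: list[dict[str, str]]) -> list[dict[str, Any]]:
    """Data Processing: drop rows that fail governance rules and cast types (the T in ETL)."""
    return [
        {"order_id": int(r["order_id"]), "region": r["region"], "amount": float(r["amount"])}
        for r in rows
        if passes_quality_rules(r)
    ]


def revenue_by_region(rows: list[dict[str, Any]]) -> dict[str, float]:
    """Data Analytics: a simple BI-style aggregate over curated data."""
    totals: dict[str, float] = {}
    for r in rows:
        totals[r["region"]] = totals.get(r["region"], 0.0) + r["amount"]
    return totals


store = Storage()
store.lake.extend(ingest(RAW_CSV))             # land raw records unchanged
store.warehouse.extend(transform(store.lake))  # load only curated records
print(revenue_by_region(store.warehouse))      # {'EU': 120.5, 'US': 280.25}
```

Keeping the raw lake copy separate from the curated warehouse copy means a record rejected by a quality rule (order 3 here) is still available for inspection or reprocessing.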

AI-ready: The data platform is designed so that AI capabilities can be added as plugins.
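One way to read "AI as a plugin" is that the platform exposes a stable interface that models implement and register against, so they can be added without changing ingestion, storage, or processing. The Python sketch below assumes that design; `AnalyticsPlugin`, `PluginRegistry`, and `MeanAmountModel` are hypothetical names, and the "model" is a trivial mean used only as a placeholder for a real ML model.

```python
from typing import Any, Protocol

Row = dict[str, Any]


class AnalyticsPlugin(Protocol):
    """Hypothetical contract every AI/ML plugin must satisfy."""
    name: str

    def run(self, rows: list[Row]) -> dict[str, Any]: ...


class PluginRegistry:
    """Hypothetical registry through which the platform discovers plugins."""

    def __init__(self) -> None:
        self._plugins: dict[str, AnalyticsPlugin] = {}

    def register(self, plugin: AnalyticsPlugin) -> None:
        # Reject duplicate names so one plugin cannot silently replace another.
        if plugin.name in self._plugins:
            raise ValueError(f"plugin {plugin.name!r} is already registered")
        self._plugins[plugin.name] = plugin

    def run(self, name: str, rows: list[Row]) -> dict[str, Any]:
        # Look up the plugin by name and delegate to it; raises KeyError if unknown.
        return self._plugins[name].run(rows)


class MeanAmountModel:
    """Placeholder "model": predicts the mean of the `amount` field."""
    name = "mean_amount"

    def run(self, rows: list[Row]) -> dict[str, Any]:
        amounts = [float(r["amount"]) for r in rows]
        return {"prediction": sum(amounts) / len(amounts) if amounts else None}


registry = PluginRegistry()
registry.register(MeanAmountModel())
print(registry.run("mean_amount", [{"amount": 10.0}, {"amount": 20.0}]))  # {'prediction': 15.0}
```

Because the registry depends only on the `name` attribute and the `run` method, swapping the placeholder for a trained model would not require changes to the rest of the platform.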